In August and September 2012, I worked with Ciça Lucchesi and Juliana Perdigão on their project VerSom while they were in residency at Marginalia+Lab.

VerSom is a loosely defined performance and experimentation environment. It is an opportunity for Ciça, Juliana and other collaborators to explore the relationship between images and sounds.

Part of my contribution to the project was a real-time system for triggering simple animations based on the vowels being sung by Juliana.

The animation system was built in Processing, based on initial sketches Ciça had made in Modul8. It consists of a few reactive scenes and a simple control panel for enabling or disabling each scene and adjusting its parameters.

The control panel (GUI):

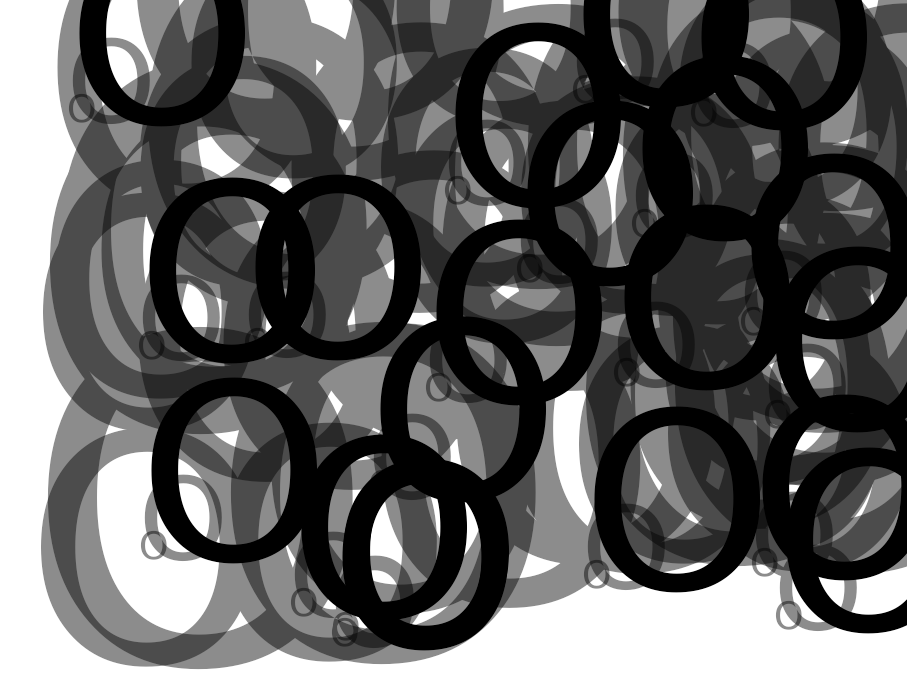

Here are some of the scenes. This one created ‘O’ shapes whose size varied with the volume of the voice picked up by the microphone.
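The scene's own code isn't reproduced here, but a common way to drive a shape's size from a microphone is sketched below, assuming audio arrives as buffers of float samples in [-1, 1]. It computes the RMS level of each buffer, smooths it with a one-pole low-pass filter, and maps it linearly onto a diameter. The function names, the 20 to 400 px range, `full_scale`, and the smoothing factor are hypothetical choices, not values from the project.

```python
import math

def rms(samples):
    """Root-mean-square level of one audio buffer of floats in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def level_to_diameter(level, min_d=20.0, max_d=400.0, full_scale=0.5):
    """Map an RMS level linearly onto a diameter, clamped to [min_d, max_d].

    full_scale (hypothetical) is the RMS level that is drawn at max_d.
    """
    t = min(max(level / full_scale, 0.0), 1.0)
    return min_d + t * (max_d - min_d)

class SmoothedLevel:
    """One-pole low-pass on the level so the 'O' grows and shrinks without jitter."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha  # 0 < alpha <= 1; smaller is smoother but slower to react
        self.value = 0.0

    def update(self, level):
        self.value += self.alpha * (level - self.value)
        return self.value

# Once per audio buffer / animation frame:
smoother = SmoothedLevel()
buffer = [0.25, -0.25] * 256  # stand-in for one microphone buffer
diameter = level_to_diameter(smoother.update(rms(buffer)))
```

Smoothing trades responsiveness for stability: a larger `alpha` makes the shape follow the voice more tightly, a smaller one makes it breathe more slowly.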

This one released running streams of vowels in different directions:

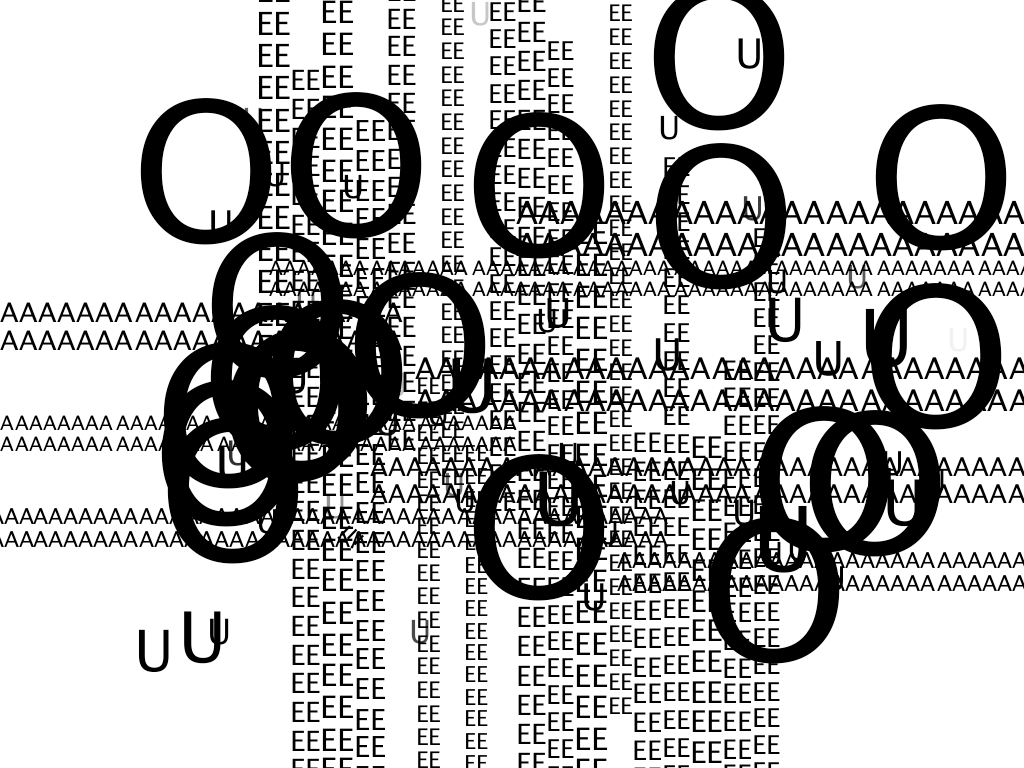
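As a rough illustration of the stream scene, assuming each vowel is drawn as a glyph moving in a straight line at constant speed: while a vowel is being sung, the scene could emit one glyph per direction every frame, so each direction fills up into a stream, and discard glyphs once they leave the canvas. `Glyph`, `emit_burst`, `update`, and all numbers are hypothetical.

```python
import math

class Glyph:
    """One vowel moving in a straight line at constant velocity (pixels per second)."""

    def __init__(self, char, x, y, angle, speed):
        self.char = char
        self.x, self.y = x, y
        self.vx = speed * math.cos(angle)
        self.vy = speed * math.sin(angle)

    def step(self, dt):
        self.x += self.vx * dt
        self.y += self.vy * dt

def emit_burst(vowel, origin, n_streams, speed, offset=0.0):
    """Emit one glyph per stream, with stream directions spaced evenly around a circle.

    Calling this every frame while a vowel is detected turns each direction into a stream.
    """
    ox, oy = origin
    return [Glyph(vowel, ox, oy, offset + 2 * math.pi * k / n_streams, speed)
            for k in range(n_streams)]

def update(glyphs, dt, width, height):
    """Advance every glyph, then keep only those still on the canvas."""
    for g in glyphs:
        g.step(dt)
    return [g for g in glyphs if 0 <= g.x <= width and 0 <= g.y <= height]
```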

This one used a physics engine to simulate realistic arcing trajectories for short words made up of vowels.
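The physics engine isn't named, so the following is not its API. It is a minimal stand-in, assuming each word is a point mass under constant gravity, integrated with semi-implicit Euler in screen coordinates (y grows downward). That alone is enough to produce the arcing paths described. `Body`, `launch`, `step`, `trajectory`, and the gravity value are hypothetical.

```python
import math
from dataclasses import dataclass

GRAVITY = 600.0  # px/s^2, assumed value; screen y grows downward, so +y is "down"

@dataclass
class Body:
    word: str
    x: float
    y: float
    vx: float
    vy: float

def launch(word, x, y, speed, angle_deg):
    """Throw a word at angle_deg above the horizontal (negative vy means upward on screen)."""
    a = math.radians(angle_deg)
    return Body(word, x, y, speed * math.cos(a), -speed * math.sin(a))

def step(body, dt, gravity=GRAVITY):
    """Semi-implicit (symplectic) Euler: update velocity first, then position."""
    body.vy += gravity * dt
    body.x += body.vx * dt
    body.y += body.vy * dt

def trajectory(body, dt, n_steps):
    """Positions visited over n_steps, e.g. for drawing the word along its arc."""
    points = []
    for _ in range(n_steps):
        step(body, dt)
        points.append((body.x, body.y))
    return points
```

Semi-implicit Euler is a common choice for this kind of animation because it stays stable at frame-rate time steps. With a launch speed of 300 px/s straight up and the gravity above, the word peaks after about 0.5 s, roughly 75 px above its start.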

More than one scene can be enabled at a time, each with its own vowels, as set in the control panel.
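The project's Processing classes aren't reproduced here. As a hypothetical sketch of this kind of routing, each scene can carry an enabled flag, the set of vowels it responds to, and a dictionary of tunable parameters, with the control panel forwarding each detected vowel to every enabled scene listening for it. `Scene`, `ControlPanel`, `dispatch`, and the scene names are invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Scene:
    """Hypothetical scene record: on/off switch, vowels it reacts to, tunable params."""
    name: str
    enabled: bool = False
    vowels: set = field(default_factory=set)
    params: dict = field(default_factory=dict)
    hits: list = field(default_factory=list)  # (vowel, level) events received

    def trigger(self, vowel, level):
        # A real scene would spawn shapes here; this one just records the event.
        self.hits.append((vowel, level))

class ControlPanel:
    """Holds the scenes and routes each detected vowel to the ones listening for it."""

    def __init__(self, scenes):
        self.scenes = {s.name: s for s in scenes}

    def set_enabled(self, name, on):
        self.scenes[name].enabled = on

    def assign_vowels(self, name, vowels):
        self.scenes[name].vowels = set(vowels)

    def set_param(self, name, key, value):
        self.scenes[name].params[key] = value

    def dispatch(self, vowel, level):
        """Trigger every enabled scene assigned this vowel; return their names."""
        triggered = []
        for scene in self.scenes.values():
            if scene.enabled and vowel in scene.vowels:
                scene.trigger(vowel, level)
                triggered.append(scene.name)
        return triggered

panel = ControlPanel([Scene("circles"), Scene("streams"), Scene("arcs")])
panel.set_enabled("circles", True)
panel.assign_vowels("circles", "o")
panel.set_enabled("streams", True)
panel.assign_vowels("streams", "ae")
panel.assign_vowels("arcs", "o")  # assigned but left disabled
panel.set_param("streams", "speed", 120.0)
```

Keeping the enable flag separate from the vowel assignment lets a performer prepare a scene's vowels in advance and switch it on at the right moment.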

The vowel-detection system was developed in Max/MSP using Linear Predictive Coding (LPC) analysis and filtering.
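The Max/MSP patch isn't shown, so the sketch below only illustrates the general LPC approach in Python and is not a port of it. It estimates LPC coefficients for one audio frame with the autocorrelation method and the Levinson-Durbin recursion, reads formant frequencies off the peaks of the LPC spectral envelope, and picks the vowel whose (F1, F2) target is nearest. The formant-matching step, the function names, and the template values are assumptions. A practical version would window each frame, choose an LPC order suited to the sample rate, and calibrate the templates to the singer's voice.

```python
import cmath
import math

def autocorr(x, max_lag):
    """Biased autocorrelation r[0..max_lag] of one analysis frame."""
    n = len(x)
    return [sum(x[i] * x[i + k] for i in range(n - k)) for k in range(max_lag + 1)]

def levinson_durbin(r, order):
    """Solve the LPC normal equations for predictor coefficients.

    Returns (a, err) with x[n] ~ sum(a[k-1] * x[n-k] for k in 1..order)
    and err the final prediction-error energy.
    """
    a = [0.0] * (order + 1)  # a[0] unused; a[1..order] are the coefficients
    err = r[0]
    for i in range(1, order + 1):
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err  # reflection coeff.
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= 1.0 - k * k
    return a[1:], err

def lpc_envelope(a, freqs, fs):
    """Spectral envelope |1 / A(e^{jw})| with A(z) = 1 - sum_k a_k z^-k."""
    env = []
    for f in freqs:
        w = 2 * math.pi * f / fs
        A = 1 - sum(ak * cmath.exp(-1j * w * k) for k, ak in enumerate(a, start=1))
        env.append(1 / abs(A))
    return env

def formants(a, fs, n_points=512):
    """Frequencies of the envelope's local maxima below fs/2, lowest first."""
    freqs = [(fs / 2) * i / n_points for i in range(n_points + 1)]
    env = lpc_envelope(a, freqs, fs)
    return [freqs[i] for i in range(1, n_points) if env[i - 1] < env[i] > env[i + 1]]

# Illustrative (F1, F2) targets in Hz: placeholders, not values from VerSom.
VOWEL_TEMPLATES = {
    "i": (300, 2300),
    "e": (500, 1900),
    "a": (750, 1200),
    "o": (500, 900),
    "u": (320, 800),
}

def detect_vowel(frame, fs, order=10, templates=VOWEL_TEMPLATES):
    """Return the template vowel nearest the frame's first two formants, or None."""
    r = autocorr(frame, order)
    if r[0] == 0:
        return None  # silent frame
    a, _ = levinson_durbin(r, order)
    f = formants(a, fs)
    if len(f) < 2:
        return None
    f1, f2 = f[0], f[1]
    return min(templates, key=lambda v: math.hypot(f1 - templates[v][0], f2 - templates[v][1]))
```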

The Processing code for the scenes and the control panel is on GitHub.